
New York Tech Faculty Receive NSF Grants to Fuel AI Innovation

August 24, 2020

How can machines detect real-life human emotions? How can machines process deep learning algorithms, which are modeled after the human brain, more efficiently?

Two researchers from NYIT College of Engineering and Computing Sciences received National Science Foundation (NSF) grants to tackle these questions and fuel the next wave of artificial intelligence (AI) innovation.

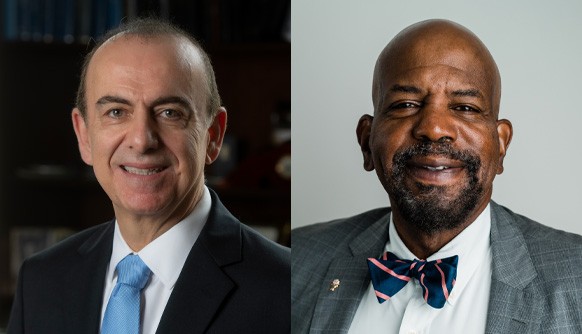

Supported by a grant of nearly $100,000, one research project, led by Houwei Cao, Ph.D., assistant professor of computer science, aims to help machines detect human emotions as they occur in real life by gathering data from multiple forms of emotional expression, such as facial expressions, body movements, gestures, and speech. The other project, led by Assistant Professor of Computer Science Jerry Cheng, Ph.D., has secured $60,000 in NSF funding. Cheng’s project, which includes collaborators from leading universities, aims to design more efficient and secure deep learning processing machines, known as AI accelerators, that can reliably process and interpret extremely large-scale sets of data with little delay.

Both projects will also allow New York Tech students to work alongside the faculty members and contribute to solving complex tasks associated with collecting valuable data and developing cutting-edge technologies.

“These grants will enable our computer science faculty to perform cutting-edge research that is as important for the challenges they address as they are for the opportunities they afford New York Tech students and faculty collaborators at other leading universities,” said Babak D. Beheshti, Ph.D., dean of the College of Engineering and Computing Sciences.

Enhancing Machine Detection of Human Emotion

In her work, Cao investigates how machines can be taught to use enormous data sets to “recognize” a person’s emotions and respond accordingly. However, in real life, automatic emotion recognition is often complicated by spontaneous, subtle expressive behaviors that occur between humans and their environments. Such complications include background noise, music, overlapping voices, lighting, and partially covered faces, among others. For this reason, emotion recognition is often most effective when multiple modalities, or forms of expression, are analyzed together.
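To illustrate why several modalities help, here is a minimal sketch of one common fusion idea: average the per-emotion scores from whichever modalities produced a usable signal. This is not Cao's system. The `fuse` function, the modality names, and the scores are all hypothetical and chosen only for illustration.

```python
# Illustrative sketch only: simple "late fusion" of per-modality emotion scores.
# All names and numbers here are hypothetical, not taken from the research project.

def fuse(scores_by_modality):
    """Average emotion scores over the modalities that returned a result.

    A modality whose signal was unusable (for example, a partially covered
    face) is represented by None and is skipped.
    """
    available = [s for s in scores_by_modality.values() if s is not None]
    if not available:
        raise ValueError("no modality produced scores")
    emotions = available[0].keys()
    fused = {e: sum(s[e] for s in available) / len(available) for e in emotions}
    # Return the highest-scoring emotion together with all fused scores.
    return max(fused, key=fused.get), fused


label, fused = fuse({
    "face": None,                             # face hidden, no estimate
    "speech": {"happy": 0.6, "sad": 0.4},     # noisy audio, weak estimate
    "gesture": {"happy": 0.8, "sad": 0.2},    # clearer estimate
})
print(label, fused)
```

In this toy case the missing face signal does not stop the system from producing an answer, because speech and gesture still contribute.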

With this funding, Cao will conduct a 12-month research project to address the challenges of understanding spontaneous emotions and imperfect audio and video signals in real-life versus controlled laboratory settings. She will use the data she collects to develop a new multimodal emotion recognition system for real-world applications. The project is expected to lead to significant advances in the benchmarking of next-generation affective computing.

“Analysis and recognition of spontaneous emotion is a challenging task,” says Cao. “Systems that work well with acted emotion datasets in laboratory environments may not work well for real-world applications ‘in-the-wild.’ We aim to design an emotion recognition system for real-life human-computer interaction applications, such as spoken dialogue systems, which are computer systems that converse with a human with voice, and conversational AI devices that use speech recognition.”

The research will also be instrumental in educating New York Tech undergraduate and graduate students, who can be involved through the Undergraduate Research and Entrepreneurship Program (UREP), the Research Experiences for Undergraduates (REU) program, and thesis projects.

Her project also has the potential to make a broader impact on healthcare, particularly mental healthcare, where multimodal data used to sense and monitor a patient’s emotional state may be collected from wearable or mobile devices and incorporated into a healthcare tracking system.

Developing Powerful AI Accelerators

Cheng is leading a research team, which will include graduate research assistants from New York Tech, as well as experts from Rutgers University, Temple University, and Indiana University. The group aims to design optimized, reliable AI accelerators that will interpret extremely large-scale deep-learning computations and models.

Deep learning is a type of machine learning that uses neural networks, algorithms loosely modeled after the human brain, to recognize patterns. The neural networks take in sensory information, such as images, sound, and text, and store this layered data in numeric form. As with the human brain, each time the machine repeats a task, the layers become more finely tuned and results improve. However, few existing AI accelerators have been designed to ensure that very large-scale sets of data are processed accurately and in a timely manner.
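The idea that results improve with each repetition can be shown with a deliberately tiny sketch: a "network" with a single adjustable weight that learns the rule y = 2x by gradient descent. Real deep learning models have many layers and millions of weights. The `train` function, the data, and the learning rate below are assumptions made for illustration and are unrelated to Cheng's project.

```python
# Illustrative sketch only: one adjustable weight learning y = 2x.
# The data, learning rate, and function name are hypothetical.

def train(epochs, lr=0.05):
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # (input, target) pairs
    w = 0.0       # the single weight, starting from an uninformed guess
    losses = []   # mean squared error recorded before each update
    for _ in range(epochs):
        loss = sum((w * x - y) ** 2 for x, y in data) / len(data)
        losses.append(loss)
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad  # nudge the weight in the direction that lowers the error
    return w, losses


w, losses = train(epochs=20)
print(f"learned weight: {w:.4f}")
print(f"error on first pass: {losses[0]:.4f}, on last pass: {losses[-1]:.8f}")
```

Each pass over the data shrinks the error a little further, which is the sense in which repetition fine-tunes the model.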

“Existing research has shown that large-scale data from various sources with high-resolution sensing or large-volume data-collection capabilities can significantly improve the performance of deep-learning approaches,” said Cheng. “However, today’s state-of-the-art hardware and software do not provide sufficient computing capabilities and resources to ensure accurate deep-learning performance in a timely manner when using extremely large-scale data. The project develops a scalable and robust system that includes a new low-cost, secure, deep-learning hardware-accelerator architecture and a suite of large-data-compatible deep-learning algorithms.”

The research team’s prototype will be designed to process these complicated data sets with low power consumption, a challenge for current deep learning processors. To help protect the neural network data, the team will also design innovative in-memory encryption schemes. In addition, they will develop data-modeling and statistical-learning algorithms to reduce the costs associated with processing these huge data sets.